`#include <string.h>`
`#include <stdlib.h>`
`#include <stdio.h>`
`#include "ax_helm_kernel.h"`
`#include <device/device_config.h>`
`#include <device/cuda/check.h>`
`#include <common/neko_log.h>`

Go to the source code of this file.

| Macros | |
|---|---|
| #define | `CASE_1D(LX)` |
| #define | `CASE_KSTEP(LX)` |
| #define | `CASE_KSTEP_PADDED(LX)` |
| #define | `CASE(LX)` |
| #define | `CASE_PADDED(LX)` |
| #define | `CASE_LARGE(LX)` |
| #define | `CASE_LARGE_PADDED(LX)` |
| #define | `CASE_VECTOR_KSTEP(LX)` |
| #define | `CASE_VECTOR_KSTEP_PADDED(LX)` |
| #define | `CASE_VECTOR(LX)` |
| #define | `CASE_VECTOR_PADDED(LX)` |

| Functions | |
|---|---|
| `template<const int >` `int` | `tune (void *w, void *u, void *dx, void *dy, void *dz, void *dxt, void *dyt, void *dzt, void *h1, void *g11, void *g22, void *g33, void *g12, void *g13, void *g23, int *nelv, int *lx)` |
| `template<const int >` `int` | `tune_padded (void *w, void *u, void *dx, void *dy, void *dz, void *dxt, void *dyt, void *dzt, void *h1, void *g11, void *g22, void *g33, void *g12, void *g13, void *g23, int *nelv, int *lx)` |
| `void` | `cuda_ax_helm (void *w, void *u, void *dx, void *dy, void *dz, void *dxt, void *dyt, void *dzt, void *h1, void *g11, void *g22, void *g33, void *g12, void *g13, void *g23, int *nelv, int *lx)` |
| `void` | `cuda_ax_helm_vector (void *au, void *av, void *aw, void *u, void *v, void *w, void *dx, void *dy, void *dz, void *dxt, void *dyt, void *dzt, void *h1, void *g11, void *g22, void *g33, void *g12, void *g13, void *g23, int *nelv, int *lx)` |
| `void` | `cuda_ax_helm_vector_part2 (void *au, void *av, void *aw, void *u, void *v, void *w, void *h2, void *B, int *n)` |

Macro Definition Documentation

◆ CASE

Value:

CASE_KSTEP(LX); \

} \

break
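The surviving fragment of `CASE(LX)` (a braced body followed by `break`) suggests the macro expands to one label of a `switch` over `lx`, forwarding to a kernel specialised for that value. The sketch below illustrates that dispatch pattern in plain host C++. The names `launch_demo`, `DEMO_CASE`, and `dispatch_demo` are hypothetical, and the `nelv * LX^3` point count is an assumption made only so the example returns something checkable; it is not the macro body from `ax_helm.cu`.

```cpp
#include <cassert>
#include <cstdio>

// Hypothetical stand-in for a templated kernel launch. LX is a compile-time
// parameter, so every supported value needs its own instantiation.
template <int LX>
int launch_demo(int nelv) {
  // Assumed work size: nelv elements with LX^3 points each.
  return nelv * LX * LX * LX;
}

// Hypothetical macro in the style of CASE(LX): one switch label per
// supported LX, forwarding to the matching template instantiation.
// The trailing `break` is terminated by the semicolon at the use site.
#define DEMO_CASE(LX)               \
  case LX: {                        \
    result = launch_demo<LX>(nelv); \
  } break

// Map a run-time lx onto a compile-time template argument.
// Returns -1 when no instantiation exists for lx.
int dispatch_demo(int nelv, int lx) {
  assert(nelv >= 0);
  int result = -1;
  switch (lx) {
    DEMO_CASE(2);
    DEMO_CASE(3);
    DEMO_CASE(4);
    default:
      std::fprintf(stderr, "lx = %d is not instantiated\n", lx);
  }
  return result;
}
```

Calling `dispatch_demo(2, 3)` selects the `LX = 3` instantiation, while an unsupported value such as `lx = 7` falls through to the `default` branch.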

◆ CASE_1D

◆ CASE_KSTEP

◆ CASE_KSTEP_PADDED

◆ CASE_LARGE

◆ CASE_LARGE_PADDED

◆ CASE_PADDED

◆ CASE_VECTOR

◆ CASE_VECTOR_KSTEP

◆ CASE_VECTOR_KSTEP_PADDED

◆ CASE_VECTOR_PADDED

Function Documentation

◆ cuda_ax_helm()

`void cuda_ax_helm (void *w, void *u, void *dx, void *dy, void *dz, void *dxt, void *dyt, void *dzt, void *h1, void *g11, void *g22, void *g33, void *g12, void *g13, void *g23, int *nelv, int *lx)`

Fortran wrapper for the CUDA device Ax kernel.

Definition at line 63 of file ax_helm.cu.
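Every argument in the signature is a pointer: the field buffers arrive as opaque `void *` and the sizes `nelv` and `lx` as `int *`, which matches Fortran's default pass-by-reference calling convention. The sketch below shows a wrapper written in that style. `demo_scale` and `demo_call` are hypothetical names, and the use of host memory, the `double` element type, and the `nelv * lx^3` buffer length are assumptions for illustration; the real wrapper presumably receives device pointers and launches a kernel instead of looping.

```cpp
#include <cassert>
#include <vector>

// Hypothetical wrapper in the style of cuda_ax_helm: opaque buffers plus
// integer sizes passed by pointer, with C linkage so the symbol name is
// not mangled and can be bound from Fortran.
extern "C" void demo_scale(void *w, void *u, int *nelv, int *lx) {
  assert(w && u && nelv && lx);
  // Sizes are dereferenced because the caller passes them by reference.
  const int n = (*nelv) * (*lx) * (*lx) * (*lx);
  // The element type is recovered by a cast (double is an assumption).
  double *wd = static_cast<double *>(w);
  const double *ud = static_cast<const double *>(u);
  for (int i = 0; i < n; ++i) wd[i] = 2.0 * ud[i];
}

// Usage from C++: take the address of each size, as a Fortran caller
// would do implicitly.
double demo_call() {
  int nelv = 1, lx = 2;             // 1 element, 2^3 = 8 points
  std::vector<double> u(8, 1.5), w(8, 0.0);
  demo_scale(w.data(), u.data(), &nelv, &lx);
  return w[7];
}
```

Passing the sizes by pointer keeps the C side compatible with a Fortran interface that does not mark its integer arguments with the `value` attribute.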

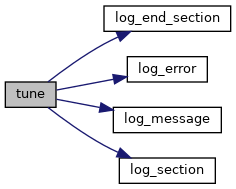

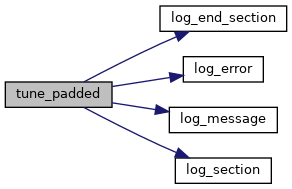

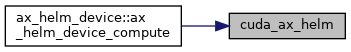

Here is the call graph for this function:

Here is the caller graph for this function:

◆ cuda_ax_helm_vector()

`void cuda_ax_helm_vector (void *au, void *av, void *aw, void *u, void *v, void *w, void *dx, void *dy, void *dz, void *dxt, void *dyt, void *dzt, void *h1, void *g11, void *g22, void *g33, void *g12, void *g13, void *g23, int *nelv, int *lx)`

Fortran wrapper for the CUDA device Ax kernel, vector version.

Definition at line 184 of file ax_helm.cu.

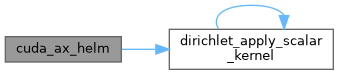

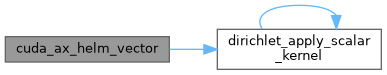

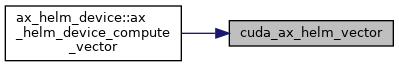

Here is the call graph for this function:

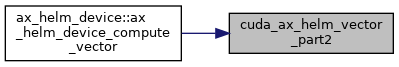

Here is the caller graph for this function:

◆ cuda_ax_helm_vector_part2()

`void cuda_ax_helm_vector_part2 (void *au, void *av, void *aw, void *u, void *v, void *w, void *h2, void *B, int *n)`

Fortran wrapper for the CUDA device Ax kernel, vector version, part 2.

Definition at line 254 of file ax_helm.cu.
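This page does not state what part 2 computes. Judging only from the argument list (three outputs `au`, `av`, `aw`, three inputs `u`, `v`, `w`, the coefficients `h2` and `B`, and a flat length `n`), one plausible reading is a pointwise update that adds an `h2 * B` weighted copy of each input component to the matching output. The host-side reference below encodes that assumption so it can be compared against device results. The update formula and the name `part2_reference` are assumptions, not taken from `ax_helm.cu`.

```cpp
#include <cassert>
#include <vector>

// Host reference for the ASSUMED part-2 update:
//   au[i] += h2[i] * B[i] * u[i]   (and likewise for av/v and aw/w).
// The formula is a guess based on the argument list only.
void part2_reference(std::vector<double> &au, std::vector<double> &av,
                     std::vector<double> &aw, const std::vector<double> &u,
                     const std::vector<double> &v,
                     const std::vector<double> &w,
                     const std::vector<double> &h2,
                     const std::vector<double> &B, int n) {
  assert(n >= 0);
  for (int i = 0; i < n; ++i) {
    const double c = h2[i] * B[i];  // pointwise coefficient
    au[i] += c * u[i];
    av[i] += c * v[i];
    aw[i] += c * w[i];
  }
}

// Small helper for checking one entry: returns the updated au[0].
double part2_demo() {
  std::vector<double> au{1.0}, av{0.0}, aw{0.0};
  std::vector<double> u{2.0}, v{0.0}, w{0.0}, h2{3.0}, B{4.0};
  part2_reference(au, av, aw, u, v, w, h2, B, 1);
  return au[0];  // 1 + 3 * 4 * 2
}
```

If the assumption holds, copying the device buffers back to the host and comparing them entry by entry against this loop gives a simple correctness check.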

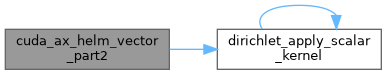

Here is the call graph for this function:

Here is the caller graph for this function: